Issue Type: issue

Status: closed

Reported By: btasker

Assigned To: btasker

Milestone: v0.2

Created: 11-Feb-23 09:32

Labels:

Fixed/Done

Improvement

Description

As noted in #19 we don't currently write any points in for empty time windows - in fact, we don't even know about them because the Flux query doesn't include them in the results.

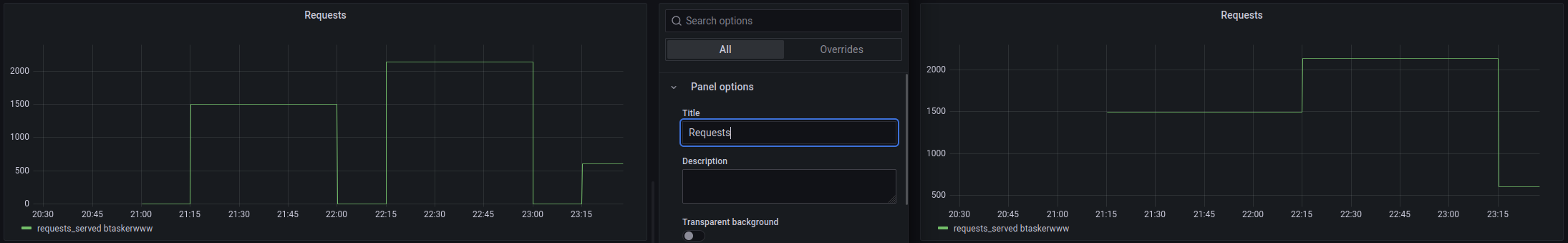

The result of this is that we end up with graphs that look different to those generated for the same time period using Kapacitor Flux Tasks.

The query for that graph uses fill(usePrevious: true) and Kapacitor had inserted a point with value 0 for 22:00, where we did not.

Activity

11-Feb-23 09:32

assigned to @btasker

11-Feb-23 09:33

I'm marking this as being for the next release because it probably should be considered a blocker - there shouldn't be that marked a difference in graphing results just because the downsampling engine has changed.

11-Feb-23 09:35

So, we can adjust the selection query to ensure that empty windows are generated

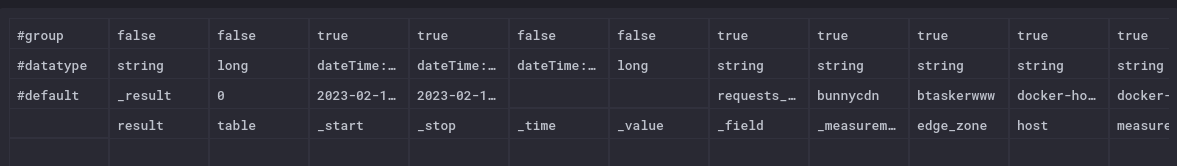

The result is that some of the tables are empty

We need to detect that this is the case, and then extract the default values (which hopefully the client makes available) in order to populate the non-value columns.
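A minimal sketch of that detection step, using plain dataclasses as hypothetical stand-ins for the client's table and column objects. The names `Column`, `Table`, `default_value`, `is_dummy` and `build_dummy_record` are assumptions made for illustration, not the downsampler's actual code:

```python
from dataclasses import dataclass, field


@dataclass
class Column:
    """Hypothetical stand-in for a result column and its default value."""
    label: str
    default_value: object = None


@dataclass
class Table:
    """Hypothetical stand-in for one table (one window) in a query result."""
    columns: list
    records: list = field(default_factory=list)


def build_dummy_record(table, zero_value=0):
    """Build a placeholder row for an empty table from its column defaults."""
    record = {col.label: col.default_value for col in table.columns}
    # The value column has no meaningful default, so use an explicit zero
    record["_value"] = zero_value
    # Mark the row so that later processing can tell it apart from real data
    record["is_dummy"] = True
    return record


def populate_empty_tables(tables):
    """Add a dummy record to any table that came back with no rows."""
    for table in tables:
        if not table.records:
            table.records.append(build_dummy_record(table))
    return tables
```

Tables that already contain rows are left untouched, so only the empty windows gain a placeholder.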

11-Feb-23 09:53

OK, so the following script demonstrates the principle

The query returns 3 windows:

Only 2100 has any points in it.

The output of the script looks like this:

The values are empty for the table with data - there's actual data, so defaults don't get provided.

We can adjust the logic to only touch the tables we want as follows

11-Feb-23 09:54

OK, so we've got the ability to fetch empty windows and generate a dummy row for them.

What we need to think about now, though, is what the ramifications on later processing are.

As an easy example, if we inject a dummy row, the `count` aggregate is going to report `1` instead of `0`.

11-Feb-23 09:58

There are some interesting ones to consider, actually, and they also highlight another potential problem.

Certain aggregates (`stddev` for example) require that there be at least `n` rows in order to function. So, if there is only one (or none), we're not going to write a point into upstream.

If we don't write a point into upstream, then graphing issues may potentially occur.
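Python's own `statistics.stdev` has the same kind of constraint: it raises `StatisticsError` when given fewer than two data points. A small sketch of a guard around it, where `safe_stddev` is a hypothetical helper rather than the downsampler's aggregate:

```python
import statistics


def safe_stddev(values):
    """Return the sample standard deviation, or None if there are too few rows."""
    # statistics.stdev() raises StatisticsError for fewer than two data points
    if len(values) < 2:
        return None
    return statistics.stdev(values)
```

A window holding a single row (real or dummy) yields `None` here, which corresponds to no point being written.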

That needs wider consideration, but the obvious initial question is:

When we generate our dummy point, do we in fact need to generate two (to allow `stdev` to fire)?

11-Feb-23 10:19

I've adjusted my mock script to build a record where none exist

11-Feb-23 10:27

mentioned in commit 039ff163fcd394091fea84ba5716f1bb35a3e005

Commit: 039ff163fcd394091fea84ba5716f1bb35a3e005 Author: B Tasker Date: 2023-02-11T10:27:07.000+00:00

Message:

feat: Add code to enable the inclusion/population of empty windows utilities/python_influxdb_downsample#20

11-Feb-23 10:31

Aggregate changes:

count

Count will override its return to 0 if dummies were generated

last / first

I feel like there's a philosophical issue with these inserting anything if there was no data there. But, I've left them as-is for the time being (so they will return 0).
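The `count` override could be sketched roughly as follows. The `is_dummy` flag on each record and the function name are assumptions made for illustration; the actual change is in the commit referenced below.

```python
def count_aggregate(records):
    """Count the rows in a window, reporting 0 if dummy rows were generated."""
    # A window only receives dummy rows when it had no real data,
    # so their presence means the true count is zero
    if any(record.get("is_dummy", False) for record in records):
        return 0
    return len(records)
```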

11-Feb-23 10:32

mentioned in commit fece2f7d813a124147418d12536720ef45b1a6ae

Commit: fece2f7d813a124147418d12536720ef45b1a6ae Author: B Tasker Date: 2023-02-11T10:31:55.000+00:00

Message:

Update aggregates to account for presence of dummies utilities/python_influxdb_downsample#20

11-Feb-23 10:45

mentioned in commit 4fc56af084db1de110dbb01291f6745abc2dba2d

Commit: 4fc56af084db1de110dbb01291f6745abc2dba2d Author: B Tasker Date: 2023-02-11T10:36:47.000+00:00

Message:

feat: Insert a 0 point for empty windows by default (utilities/python_influxdb_downsample#20)

This introduces a new job level config variable: `window_create_empty`

If enabled, we'll tell Flux to include tables for empty periods and will construct dummy points in order to allow us to return 0 (or 0.0, or "" etc depending on type) to the upstream for that time
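Choosing the zero by type might look something like the sketch below. The keys are Flux annotated CSV data type names; the `ZERO_VALUES` mapping and `zero_for_type` helper are hypothetical and only cover the types mentioned above.

```python
# Zero-like values keyed by Flux annotated CSV data type name
ZERO_VALUES = {
    "long": 0,
    "unsignedLong": 0,
    "double": 0.0,
    "string": "",
}


def zero_for_type(data_type):
    """Return the value to write for an empty window, based on column type."""
    if data_type not in ZERO_VALUES:
        raise ValueError(f"no zero value defined for type {data_type!r}")
    return ZERO_VALUES[data_type]
```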

11-Feb-23 10:45

mentioned in commit 2d93ef3eb569924c8368e0638a35f7465dd3837d

Commit: 2d93ef3eb569924c8368e0638a35f7465dd3837d Author: B Tasker Date: 2023-02-11T10:44:27.000+00:00

Message:

Disable dummy creation for now utilities/python_influxdb_downsample#20

Found an issue.

The `_start` and `_stop` values in the dummy records are being set to the bounds of the query, rather than the bounds that the window would cover.

11-Feb-23 23:21

mentioned in commit fa268851f841a3f9b9ab97d86a67f361652f4f37

Commit: fa268851f841a3f9b9ab97d86a67f361652f4f37 Author: B Tasker Date: 2023-02-11T23:18:44.000+00:00

Message:

Re-enable dummy creation, located the source of the issue utilities/python_influxdb_downsample#20

The timestamps weren't being set incorrectly, there was some misleading output.

The initial issue was correct though, generated empty windows weren't being output for the metric being checked.

The metric uses the `max` aggregate, which performs the following test to verify there's a value to work with

The problem is, 0 will evaluate to False.
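The original check isn't reproduced here. As a general illustration of the pitfall in Python, a truthiness test treats `0` the same as a missing value, whereas an explicit comparison against `None` does not. All of the names below are hypothetical:

```python
def has_value_truthy(value):
    """Buggy check: treats 0, 0.0 and "" the same as a missing value."""
    return bool(value)


def has_value(value):
    """Safer check: only None counts as missing, so 0 is a valid value."""
    return value is not None


def max_aggregate(values):
    """Return the largest value, keeping zeros, or None if nothing is present."""
    present = [v for v in values if has_value(v)]
    return max(present) if present else None
```

With the truthiness version, a window containing only a generated 0 looks empty and no point is output for it.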

11-Feb-23 23:28

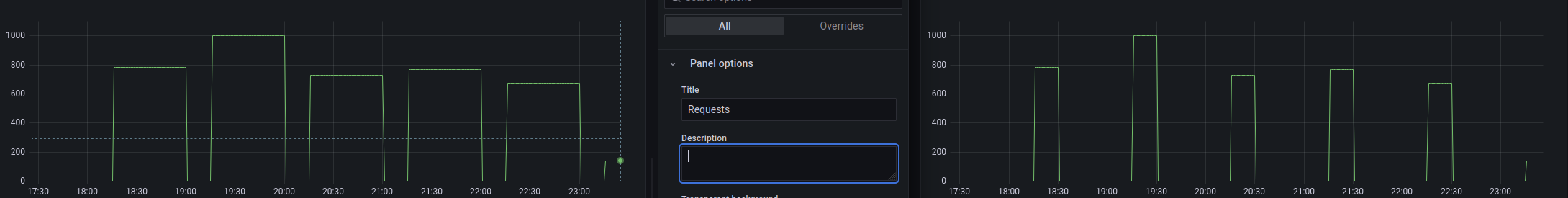

Curiously, we still get a difference in the graphs

If we turn `fill()` off though, we can see why.

There are more points on the graph on the right (created by the changes in this ticket). It looks like Flux tasks create the empty/0 points less reliably, so `fill(usePrevious: true)` results in fatter bars.

Although unexpected, I don't think that this points to an issue in the downsampler - I'd be concerned if there were non-0 values missing, but having a 0 point for each empty 15 min window is exactly the aim of this ticket.
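The fatter bars can be imitated in plain Python with a forward fill. This is a sketch with made-up values; `forward_fill` is a hypothetical helper and is not how Flux implements `fill()`:

```python
def forward_fill(series):
    """Replace None entries with the most recent non-None value."""
    filled = []
    previous = None
    for value in series:
        if value is not None:
            previous = value
        filled.append(previous)
    return filled


# One entry per 15 minute window; None means no point was written
with_zero_points = [4, 0, 0, 0]
without_zero_points = [4, None, None, 0]

print(forward_fill(with_zero_points))     # [4, 0, 0, 0]
print(forward_fill(without_zero_points))  # [4, 4, 4, 0]
```

Where the 0 points are missing, the previous value is carried across the gap, so the bar spans three windows instead of one.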

11-Feb-23 23:30

mentioned in issue #19

12-Feb-23 10:10

mentioned in issue #23

08-May-23 09:21

mentioned in issue #25

08-May-23 11:27

It's worth noting that this caused issues in #25 and had to be disabled in the job by setting

It caused problems because of a combination of factors:

`aggregateWindow(every: v.windowPeriod, fn: mean)`

The result was that there were 15 minute windows where the value was 0. The graph itself was using a 30 minute `windowPeriod` and so we effectively took the correct value and divided by 2, giving an incorrect reading.

Technically, it was a configuration issue - it was made possible by the sampling interval in the raw data being the same as the downsample window, but it's worth noting as a possible outcome of this.

It was, however, possible to correct it for historic data by filtering those `0` values out in the query
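The query itself isn't reproduced here. The arithmetic behind both the halving and the fix can be sketched in Python (rather than Flux), using a made-up reading of 10.0:

```python
from statistics import mean

# Two 15 minute windows feeding one 30 minute graph window:
# one real reading and one injected 0 (the value 10.0 is made up)
window_values = [10.0, 0.0]

# Averaging over both windows halves the real reading
print(mean(window_values))  # 5.0

# Dropping the zeros before averaging recovers it
non_zero = [v for v in window_values if v != 0]
print(mean(non_zero))  # 10.0
```

Note that a filter like this cannot distinguish injected zeros from genuine 0 readings, so it only suits series where a real 0 is not expected.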